Embedding models

This conceptual overview focuses on text-based embedding models.

Embedding models can also be multimodal, though such models are not currently supported by LangChain.

Overview

Imagine being able to capture the essence of any text - a tweet, document, or book - in a single, compact representation. This is the power of embedding models, which lie at the heart of many retrieval systems. Embedding models transform human language into a format that machines can understand and compare with speed and accuracy. These models take text as input and produce a fixed-length array of numbers, a numerical fingerprint of the text's semantic meaning. Embeddings allow search systems to find relevant documents based not just on keyword matches, but on semantic understanding.

Key concepts

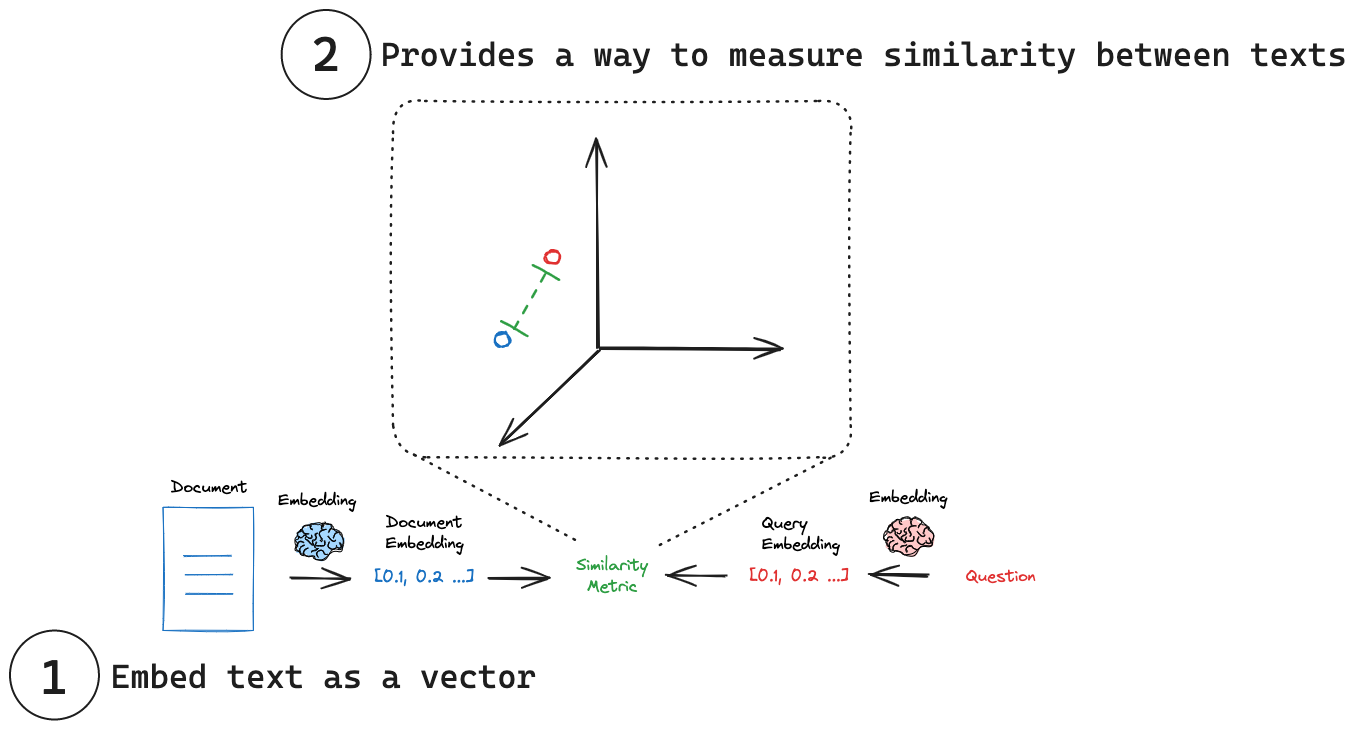

(1) Embed text as a vector: Embeddings transform text into a numerical vector representation.

(2) Measure similarity: Embedding vectors can be compared using simple mathematical operations.

Embedding data

Historical context

The landscape of embedding models has evolved significantly over the years. A pivotal moment came in 2018 when Google introduced BERT (Bidirectional Encoder Representations from Transformers). BERT applied transformer models to embed text as a simple vector representation, which led to unprecedented performance across various NLP tasks. However, BERT wasn't optimized for generating sentence embeddings efficiently. This limitation spurred the creation of SBERT (Sentence-BERT), which adapted the BERT architecture to generate semantically rich sentence embeddings that are easily comparable via similarity metrics like cosine similarity. This dramatically reduced the computational overhead for tasks like finding similar sentences. Today, the embedding model ecosystem is diverse, with numerous providers offering their own implementations. To navigate this variety, researchers and practitioners often turn to benchmarks like the Massive Text Embedding Benchmark (MTEB) for objective comparisons.

- See the seminal BERT paper.

- See Cameron Wolfe's excellent review of embedding models.

- See the Massive Text Embedding Benchmark (MTEB) leaderboard for a comprehensive overview of embedding models.

LangChain Interface

Today, there are many different embedding models. LangChain provides a universal interface for working with them, with standard methods for common operations. This common interface simplifies interaction with various embedding providers through two central methods:

- embed_documents: For embedding multiple texts (documents)

- embed_query: For embedding a single text (query)

This distinction is important, as some providers employ different embedding strategies for documents (which are to be searched) versus queries (the search input itself).

To illustrate, here's a practical example using LangChain's .embed_documents method to embed a list of strings:

    from langchain_openai import OpenAIEmbeddings

    embeddings_model = OpenAIEmbeddings()

    # Embed a list of strings; one vector is returned per input text.
    embeddings = embeddings_model.embed_documents(
        [
            "Hi there!",
            "Oh, hello!",
            "What's your name?",
            "My friends call me World",
            "Hello World!"
        ]
    )

    # Number of vectors, and the length of each vector.
    len(embeddings), len(embeddings[0])
    # (5, 1536)

For convenience, you can also use the embed_query method to embed a single text:

    query_embedding = embeddings_model.embed_query("What is the meaning of life?")
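To make the document/query distinction concrete, here is a minimal sketch of a toy embedder that exposes the same two method names but treats the two kinds of input differently. Everything in it is an assumption made for illustration: `ToyPrefixEmbeddings` is a hypothetical class (not part of LangChain), the "passage: " and "query: " prefixes mimic a convention that some open-source models expect, and the word-hashing "model" exists only so the example runs without an API key.

```python
import hashlib
import math


class ToyPrefixEmbeddings:
    """Hypothetical embedder with embed_documents / embed_query methods.

    NOT a LangChain class. It hashes words into a small vector so the
    example runs offline; real providers call a trained model instead.
    """

    def __init__(self, dim: int = 16):
        self.dim = dim

    def _embed(self, text: str) -> list[float]:
        # Bag-of-words hashing: each word increments one bucket.
        vec = [0.0] * self.dim
        for word in text.lower().split():
            digest = hashlib.md5(word.encode("utf-8")).digest()
            vec[digest[0] % self.dim] += 1.0
        # L2-normalize so every vector has unit length.
        norm = math.sqrt(sum(x * x for x in vec)) or 1.0
        return [x / norm for x in vec]

    def embed_documents(self, texts: list[str]) -> list[list[float]]:
        # Documents are embedded with a "passage: " prefix (assumed convention).
        return [self._embed("passage: " + t) for t in texts]

    def embed_query(self, text: str) -> list[float]:
        # Queries are embedded with a different "query: " prefix.
        return self._embed("query: " + text)


toy = ToyPrefixEmbeddings()
doc_vectors = toy.embed_documents(["Hello World!", "Hi there!"])
query_vector = toy.embed_query("Hello World!")
print(len(doc_vectors), len(doc_vectors[0]), len(query_vector))
```

Because the two methods hand different strings to the underlying model, calling code should always use embed_documents for the texts being indexed and embed_query for the search input, even when a given provider happens to treat them identically.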

- See the full list of LangChain embedding model integrations.

- See these how-to guides for working with embedding models.

Measure similarity

Each embedding is essentially a set of coordinates in a vast, abstract space. In this space, the position of each point (embedding) reflects the meaning of its corresponding text. Just as similar words might be close to each other in a thesaurus, similar concepts end up close to each other in this embedding space. This allows for intuitive comparisons between different pieces of text. By reducing text to these numerical representations, we can use simple mathematical operations to quickly measure how alike two pieces of text are, regardless of their original length or structure. Some common similarity metrics include:

- Cosine Similarity: Measures the cosine of the angle between two vectors.

- Euclidean Distance: Measures the straight-line distance between two points.

- Dot Product: Measures the projection of one vector onto another, scaled by the second vector's length, so it reflects both direction and magnitude.

As an example, any two embedded texts can be compared with cosine_similarity:

    import numpy as np

    def cosine_similarity(vec1, vec2):
        # Dot product of the vectors divided by the product of their lengths.
        dot_product = np.dot(vec1, vec2)
        norm_vec1 = np.linalg.norm(vec1)
        norm_vec2 = np.linalg.norm(vec2)
        return dot_product / (norm_vec1 * norm_vec2)

    # Compare the query embedding with the first document embedding from above.
    similarity = cosine_similarity(query_embedding, embeddings[0])
    print("Cosine Similarity:", similarity)
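The other two metrics in the list above can be sketched in the same way. The version below is a minimal illustration that uses only the Python standard library so it runs without dependencies; the function names and the three toy vectors are assumptions chosen for the example, not real embeddings.

```python
import math


def euclidean_distance(vec1, vec2):
    # Straight-line distance between two points; smaller means more similar.
    return math.dist(vec1, vec2)


def dot_product(vec1, vec2):
    # Sum of element-wise products; grows with vector length as well as alignment.
    return sum(x * y for x, y in zip(vec1, vec2))


# Toy 3-dimensional vectors standing in for real embeddings.
a = [1.0, 0.0, 0.0]
b = [0.0, 1.0, 0.0]  # perpendicular to a
c = [2.0, 0.0, 0.0]  # same direction as a, twice the length

print(euclidean_distance(a, b))  # about 1.414 (the square root of 2)
print(euclidean_distance(a, c))  # 1.0
print(dot_product(a, b))  # 0.0
print(dot_product(a, c))  # 2.0
```

Note that a and c point in exactly the same direction, so their cosine similarity is 1, yet their Euclidean distance is nonzero and their dot product is larger than that of a with itself. When embeddings are normalized to unit length, these differences disappear: the dot product equals the cosine similarity, and all three metrics rank documents in the same order.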

- See Simon Willison’s nice blog post and video on embeddings and similarity metrics.

- See this documentation from Google on similarity metrics to consider with embeddings.

- See Pinecone's blog post on similarity metrics.

- See OpenAI's FAQ on what similarity metric to use with OpenAI embeddings.

Advanced

Embedding with higher granularity

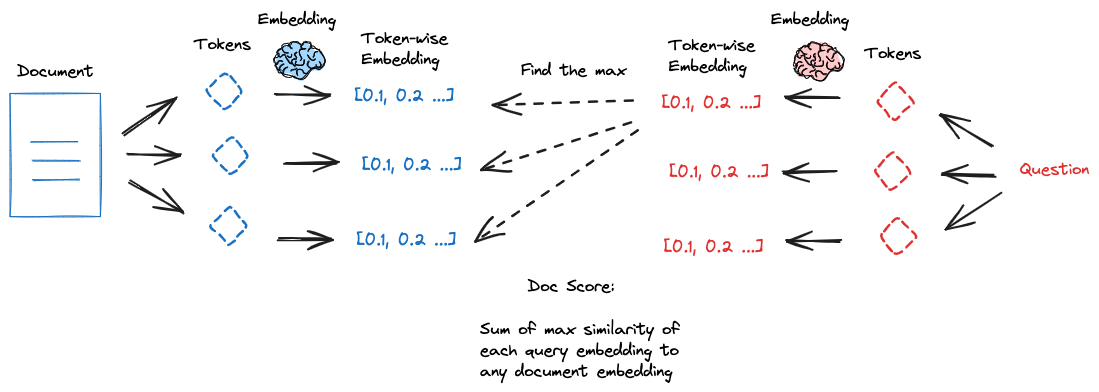

Embedding models compress text into fixed-length (vector) representations, which can put a heavy burden on that single vector to capture the semantic nuance and detail of the document. In some cases, irrelevant or redundant content can dilute the semantic usefulness of the embedding. ColBERT (Contextualized Late Interaction over BERT) is an innovative approach to address this limitation by using higher granularity embeddings. Here's how ColBERT works:

- Token-level embeddings: Produce contextually influenced embeddings for each token in the document and the query.

- MaxSim operation: For each query token, compute its maximum similarity with all document tokens.

- Aggregation: The final relevance score is obtained by summing these maximum similarities across all query tokens.
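The three steps above can be sketched in a few lines. This is a simplified illustration rather than the ColBERT implementation: it assumes the token embeddings have already been produced and normalized to unit length (so a dot product equals cosine similarity), and the function names and 2-dimensional toy vectors are made up for the example.

```python
def dot(u, v):
    # Dot product of two equal-length vectors.
    return sum(x * y for x, y in zip(u, v))


def maxsim_score(query_token_vecs, doc_token_vecs):
    """Sum, over query tokens, of the best-matching document token similarity."""
    score = 0.0
    for q in query_token_vecs:
        # MaxSim: highest similarity between this query token and any document token.
        score += max(dot(q, d) for d in doc_token_vecs)
    return score


# Toy unit-length token embeddings (illustrative values only).
query_tokens = [[1.0, 0.0], [0.0, 1.0]]
doc_a_tokens = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
doc_b_tokens = [[0.6, 0.8], [0.8, 0.6]]

print(maxsim_score(query_tokens, doc_a_tokens))  # 2.0
print(maxsim_score(query_tokens, doc_b_tokens))  # 1.6
```

Document A scores higher because each query token has an exact match among its tokens, while document B only offers partial matches. The extra token in document A does not lower its score, since each query token only counts its single best match.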

This token-wise scoring can yield strong results, especially for tasks requiring precise matching or handling longer documents. Key advantages of ColBERT:

- Improved accuracy: Token-level interactions can capture more nuanced relationships between query and document.

- Interpretability: The token-level matching allows for easier interpretation of why a document was considered relevant.

However, ColBERT does come with some trade-offs:

- Increased computational cost: Processing and storing token-level embeddings requires more resources.

- Complexity: Implementing and optimizing ColBERT can be more challenging than simpler embedding models.
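A rough back-of-the-envelope calculation shows where the storage cost comes from. All numbers below are illustrative assumptions rather than measurements: the 1536 figure is the vector length from the embed_documents example earlier, while the tokens-per-document and per-token dimension values are hypothetical.

```python
# All values are illustrative assumptions, not measurements.
BYTES_PER_FLOAT32 = 4
single_vector_dim = 1536   # one vector per document
tokens_per_document = 300  # assumed average document length in tokens
token_vector_dim = 128     # assumed size of each per-token embedding

# Storage for one document under each scheme.
single_vector_bytes = single_vector_dim * BYTES_PER_FLOAT32
token_level_bytes = tokens_per_document * token_vector_dim * BYTES_PER_FLOAT32

print(single_vector_bytes)  # 6144
print(token_level_bytes)  # 153600
print(token_level_bytes / single_vector_bytes)  # 25.0
```

Under these assumptions, token-level storage is 25 times larger per document, and the gap widens as documents get longer because the single-vector cost stays fixed.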

| Name | When to use | Description |
|---|---|---|
| ColBERT | When higher granularity embeddings are needed. | ColBERT uses contextually influenced embeddings for each token in the document and query to get a granular query-document similarity score. See the ColBERT paper. |

See our RAG from Scratch video on ColBERT.